Web Parsing: An Essential Process for Marketing in 2022

Nowadays, competition between online businesses in every niche keeps growing. Every new company goes through a long process of market analysis and website optimization before it reaches a leading position in its niche. Competitive research is one of the most important steps in making your brand unique and choosing the features that will attract customers. Parsing is a popular method for finding this information quickly, which helps a company grow efficiently. So, let’s discover why it is so widely used in 2022 and what benefits it provides.

What Is the Aim of Parsing?

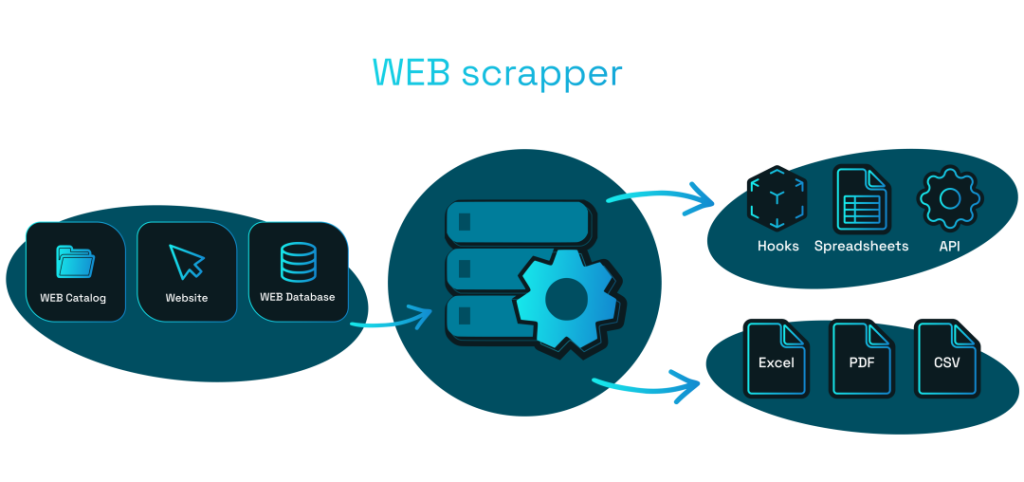

Simply put, parsing is the automated extraction of data from many websites at once. The collected information helps a new site improve its structure and adapt to current market conditions. Analysts, marketers, and web developers use the gathered data to reach the following goals:

- Market research: the information helps you understand the current state of your company’s industry and set key commercial parameters properly (prices, product range, etc.).

- Content creation: the data sample helps introduce new content types that already work well on competitors’ sites.

- Services catalog improvement: scraping the most relevant sites helps identify the products and services people look for most and optimize the catalog accordingly.

- Understanding the target audience: identifying which categories of users visit these websites helps choose the right promotion strategy.

- Dynamic data analytics: changes on a competitor’s site over time are revealed, making it possible to study them in detail.

- Finding links to your site: you can determine how many domains have outbound links pointing to your company’s site.

- Building a review database: information collected from review forums helps determine the strengths and weaknesses of similar companies.

Achieving these goals through scraping helps give the website the qualities it needs and improve its performance.
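To make the "finding links to your site" goal more concrete, here is a minimal Python sketch that counts referring domains. It assumes the pages have already been downloaded into a dictionary mapping each page URL to its HTML; the names `LinkCollector`, `links_to`, and `referring_domains`, as well as the `.test` domains, are hypothetical. Only the standard library is used, and subdomains such as `www.` are treated as part of the target site.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collects the href value of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def links_to(host, target_domain):
    """True if host is the target domain or one of its subdomains."""
    return host == target_domain or host.endswith("." + target_domain)


def referring_domains(pages, target_domain):
    """Return the set of external domains whose pages link to target_domain.

    pages: dict mapping page URL -> HTML text that was already downloaded.
    """
    referrers = set()
    for page_url, html in pages.items():
        source = urlparse(page_url).hostname or ""
        if links_to(source, target_domain):
            continue  # links from your own site are internal, not backlinks
        collector = LinkCollector()
        collector.feed(html)
        collector.close()
        for href in collector.hrefs:
            # Resolve relative hrefs against the page URL before comparing hosts.
            host = urlparse(urljoin(page_url, href)).hostname or ""
            if links_to(host, target_domain):
                referrers.add(source)
                break  # one link is enough to count this domain
    return referrers


if __name__ == "__main__":
    pages = {
        "https://blog-a.test/post": '<a href="https://www.mybrand.test/shop">Shop</a>',
        "https://blog-b.test/review": '<a href="/about">About</a>',
        "https://news-c.test/item": '<p>See <a href="http://mybrand.test">MyBrand</a></p>',
    }
    print(sorted(referring_domains(pages, "mybrand.test")))
    # prints ['blog-a.test', 'news-c.test']
```

Downloading is deliberately left out, so the counting logic stays visible; in practice the `pages` dictionary would be filled by a crawler.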

Key Advantages

Automating data collection offers several advantages over gathering it by hand:

- quick database creation;

- data filtering options;

- fewer errors in reports;

- scheduled scraping;

- optimization tips (offered by some tools).

Together, these advantages make data extraction one of the most popular web analytics and optimization strategies.
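Data filtering, one of the advantages listed above, can be illustrated with a short Python sketch. It assumes the scraped offers have already been turned into records with a name, a price, and a source URL; the `Offer` type and the `filter_offers` function are hypothetical. The sketch drops pages that were scraped twice, keeps only offers within a price range, and sorts the result from cheapest to most expensive.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Offer:
    """One scraped product offer (hypothetical record shape)."""
    name: str
    price: float
    url: str


def filter_offers(offers, min_price=0.0, max_price=float("inf")):
    """Drop repeated URLs, keep offers priced within [min_price, max_price],
    and return them sorted from cheapest to most expensive."""
    seen_urls = set()
    kept = []
    for offer in offers:
        if offer.url in seen_urls:
            continue  # the same page was scraped more than once
        seen_urls.add(offer.url)
        if min_price <= offer.price <= max_price:
            kept.append(offer)
    return sorted(kept, key=lambda offer: offer.price)


if __name__ == "__main__":
    scraped = [
        Offer("Desk Lamp", 25.0, "https://shop-a.test/lamp"),
        Offer("Desk Lamp", 25.0, "https://shop-a.test/lamp"),  # duplicate
        Offer("Standing Desk", 420.0, "https://shop-b.test/desk"),
        Offer("Office Chair", 45.5, "https://shop-c.test/chair"),
    ]
    for offer in filter_offers(scraped, max_price=100):
        print(offer.name, offer.price)
    # prints:
    # Desk Lamp 25.0
    # Office Chair 45.5
```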

Data Types for Research

Here are the most popular types of data collected for research:

- product descriptions;

- prices/special offers;

- photo/video content;

- reviews/testimonials;

- customer contacts.

Which types matter depends on the company and on how it plans to use the data. The data is typically collected by scraping bots, which are much faster than gathering it manually.

Are There Restrictions on Data Mining?

In general, scraping is a legal procedure if it serves only your business goals and the data is not shared with third parties. Still, it is important to know what is forbidden:

- Flooding a site with requests (a DDoS attack) that disrupts its performance.

- Collecting confidential user data from accounts.

- Copying content and falsely claiming authorship.

- Scraping government and other classified materials.

These restrictions ensure that parsing does not harm other websites and remains entirely legal.
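One practical way to avoid overloading a site is to respect its `robots.txt` rules and pause between requests. This minimal Python sketch relies on the standard `urllib.robotparser` module; the `PoliteFetcher` class, the `MarketBot` user agent, and the one-second default delay are assumptions for illustration. Note that `robotparser` only picks up whole-number `Crawl-delay` values.

```python
import time
from urllib import robotparser


class PoliteFetcher:
    """Checks robots.txt rules and spaces out requests to one site.

    robots_lines: the lines of the site's robots.txt, already downloaded.
    """

    def __init__(self, robots_lines, user_agent, default_delay=1.0):
        self.user_agent = user_agent
        self.rules = robotparser.RobotFileParser()
        self.rules.parse(robots_lines)
        # Use the site's Crawl-delay if it declares a non-zero one, else our default.
        self.delay = self.rules.crawl_delay(user_agent) or default_delay
        self._last_request = None

    def allowed(self, url):
        """True if robots.txt permits this user agent to fetch the URL."""
        return self.rules.can_fetch(self.user_agent, url)

    def wait_turn(self):
        """Sleep just long enough to keep `delay` seconds between requests."""
        if self._last_request is not None:
            remaining = self.delay - (time.monotonic() - self._last_request)
            if remaining > 0:
                time.sleep(remaining)
        self._last_request = time.monotonic()


if __name__ == "__main__":
    robots = ["User-agent: *", "Crawl-delay: 2", "Disallow: /private/"]
    fetcher = PoliteFetcher(robots, "MarketBot")
    print(fetcher.delay)                                   # 2
    print(fetcher.allowed("https://shop.test/products"))   # True
    print(fetcher.allowed("https://shop.test/private/x"))  # False
```

A crawler would call `allowed()` before each URL and `wait_turn()` right before sending the request, so the target site sees a steady, modest request rate.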

What Is Self-Parsing?

It is the same kind of data mining, but performed by a company on its own website to detect flaws and outdated structural or content elements. Sites are commonly parsed for broken links, missing titles and descriptions, duplicate content, a lack of images, and so on. This is necessary for further optimization and the brand’s marketing growth.
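A basic self-audit can be scripted with Python’s built-in `html.parser`. This minimal sketch assumes you pass it the HTML of one of your own pages; the `PageAudit` class and the `audit_page` function are hypothetical names. It flags a missing title, a missing meta description, and pages with no images, and it returns the page’s links so they can be checked for broken targets in a separate step (not shown, since that requires sending HTTP requests).

```python
from html.parser import HTMLParser


class PageAudit(HTMLParser):
    """Records the on-page elements a basic self-audit looks at."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.meta_description = ""
        self.image_count = 0
        self.links = []
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.meta_description = attrs.get("content") or ""
        elif tag == "img":
            self.image_count += 1
        elif tag == "a" and attrs.get("href"):
            self.links.append(attrs["href"])

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def audit_page(html):
    """Return (issues, links) for one page of your own site."""
    page = PageAudit()
    page.feed(html)
    page.close()
    issues = []
    if not page.title.strip():
        issues.append("missing <title>")
    if not page.meta_description.strip():
        issues.append("missing meta description")
    if page.image_count == 0:
        issues.append("no images")
    # The links are returned so a separate step can request each one
    # and flag those that fail (broken links); that step is not shown.
    return issues, page.links


if __name__ == "__main__":
    html = '<html><head><title></title></head><body><a href="/old-page">Old</a></body></html>'
    print(audit_page(html))
    # prints (['missing <title>', 'missing meta description', 'no images'], ['/old-page'])
```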

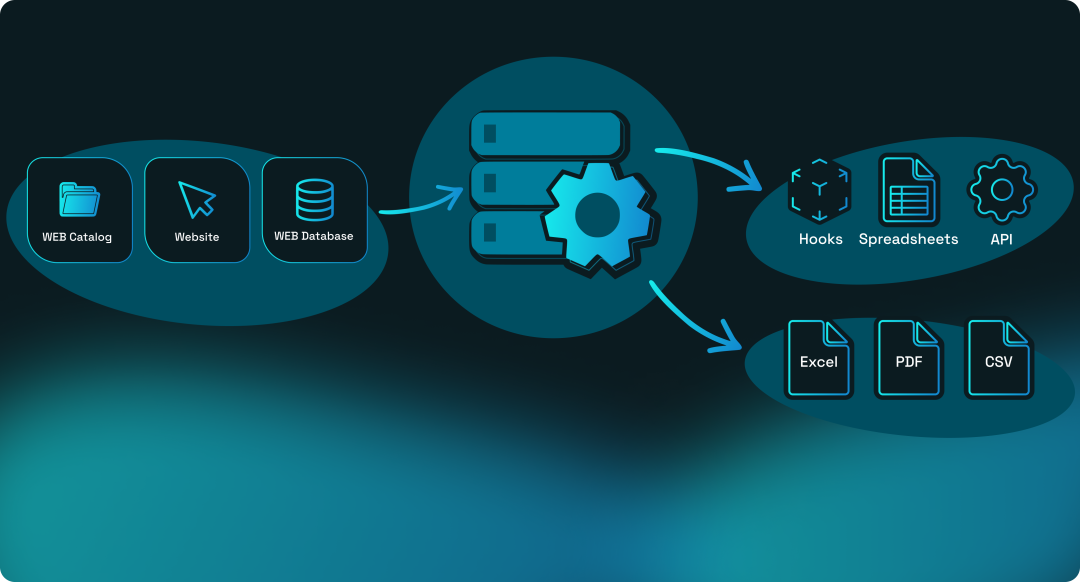

Common Web Crawling Steps

There is no standardized algorithm, and each specialist uses their own approach. However, several steps are essential:

- Identifying the target URLs.

- Choosing a suitable proxy server to overcome limitations on data gathering.

- Sending requests to the URLs to retrieve their HTML code.

- Determining which parts of the code hold the needed data.

- Parsing those parts to extract the data.

- Converting the data into a suitable format.

- Exporting the data to convenient storage.
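The steps above can be sketched in Python using only the standard library. Everything specific here is an assumption for illustration: the `MarketBot/0.1` user agent, the CSS class names `product-name` and `price`, and all function names. Real sites need their own selectors, and the parser is deliberately simple: it expects each value to sit directly inside the element that carries the class.

```python
import csv
import io
import urllib.request
from html.parser import HTMLParser


def fetch_html(url, proxy=None, timeout=10):
    """Steps 2-3: request the page, optionally through a proxy server."""
    handlers = []
    if proxy:  # e.g. "http://proxy.example:8080" (hypothetical address)
        handlers.append(urllib.request.ProxyHandler({"http": proxy, "https": proxy}))
    opener = urllib.request.build_opener(*handlers)
    request = urllib.request.Request(url, headers={"User-Agent": "MarketBot/0.1"})
    with opener.open(request, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


class ProductParser(HTMLParser):
    """Steps 4-5: collect text from elements whose class marks the data.

    Assumes each value sits directly inside the element carrying the class.
    """

    FIELDS = {"product-name": "name", "price": "price"}  # hypothetical classes

    def __init__(self):
        super().__init__()
        self.rows = []
        self._field = None

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        for css_class, field in self.FIELDS.items():
            if css_class in classes:
                if field == "name":  # a new product name starts a new row
                    self.rows.append({"name": "", "price": ""})
                self._field = field

    def handle_endtag(self, tag):
        self._field = None

    def handle_data(self, data):
        if self._field and self.rows:
            self.rows[-1][self._field] += data


def to_records(rows):
    """Step 6: convert raw strings into clean, typed records."""
    records = []
    for row in rows:
        cleaned = "".join(ch for ch in row["price"] if ch.isdigit() or ch == ".")
        try:
            price = float(cleaned)
        except ValueError:
            continue  # skip rows whose price could not be read
        records.append({"name": row["name"].strip(), "price": price})
    return records


def export_csv(records):
    """Step 7: serialise the records as CSV text for a file or database import."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["name", "price"])
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


def scrape(url, proxy=None):
    """Runs steps 2-7 for one target URL chosen in step 1; returns CSV text."""
    parser = ProductParser()
    parser.feed(fetch_html(url, proxy=proxy))
    parser.close()
    return export_csv(to_records(parser.rows))


if __name__ == "__main__":
    # Offline demo of steps 4-7 on a hand-written snippet.
    sample = ('<div><h2 class="product-name">Desk Lamp</h2>'
              '<span class="price">$25.00</span></div>')
    parser = ProductParser()
    parser.feed(sample)
    parser.close()
    print(export_csv(to_records(parser.rows)), end="")
```

Against a live page, `scrape()` chains all the steps and returns CSV text. If you save that text to a file, open it with `newline=""` so the CSV line endings are not doubled on Windows.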

Web scraping agencies follow a well-defined algorithm to minimize the risk of failure and keep their research both legal and efficient.

To Sum Up

Overall, parsing is widely used by site administrators, online marketers, and web developers to collect specific data from websites worldwide. It helps businesses improve their online performance by implementing new features that reflect the latest trends.